Hello, I wanted to know whether this is what resource usage for websocket streaming normally looks like.

Setup

t3.medium with gp3 storage (3000 IOPS)

Postgres + TimescaleDB, tuned with timescaledb-tune (accepted all recommendations)

9000 instruments in MODE_FULL

This instance is dedicated to streaming, so nothing runs on it apart from the Python client[1] and the database. No reads have started on the database yet either, so it is only ingesting data.

Usage

07:21:52 up 2:03, 3 users, load average: 11.93, 11.89, 11.12

Mem: 3836 1802 139 1004 3153 2034

Questions

Should I consider changing the instance type? I suspect this is being billed heavily, since I'm using CPU cycles beyond the baseline allocation. Which instance type would be best, given that this appears to be a CPU-intensive task and the machine is a bit low on memory?

Should I consider tweaking the IOPS on storage, so that if it's the bottleneck, things improve a bit?

Should I push postgres a bit more? As I mentioned earlier, it currently uses the timescaledb-tune auto settings.

Good places to learn about profiling applications, databases, designs so I could make better choices.

What's your cloud bill looking like now?

I've shared what I think is relevant to get this discussion going; let me know if you need to look at anything else. Even generic pointers are welcome!

[1] - 3 partitions processing 3000 instruments each, running in a multiprocessing setup. It's just Python + psycopg.
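The partitioned multiprocessing setup in [1] can be sketched roughly as below. This is a minimal sketch, not the poster's code. `partition`, `stream_partition`, and `run_partitions` are hypothetical names, instruments are assumed to be integer tokens, and the worker body is only a placeholder for the real websocket-plus-psycopg loop.

```python
from multiprocessing import Pool


def partition(tokens: list[int], parts: int) -> list[list[int]]:
    """Split instrument tokens into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(tokens), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one leftover token each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(tokens[start:end])
        start = end
    return chunks


def stream_partition(chunk: list[int]) -> int:
    """Hypothetical worker: in a real setup it would open its own websocket
    subscription and DB connection for `chunk`. Here it only reports its size."""
    return len(chunk)


def run_partitions(tokens: list[int], parts: int = 3) -> list[int]:
    # One OS process per partition, so each gets its own interpreter and GIL.
    with Pool(processes=parts) as pool:
        return pool.map(stream_partition, partition(tokens, parts))
```

Call `run_partitions(...)` from under an `if __name__ == "__main__":` guard. With 9000 tokens and 3 parts, each process owns 3000 instruments.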

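Yeah, t3.medium is probably not ideal for what you're doing. It's a burstable instance, and with that load the CPU credits will run out fast. In standard credit mode, performance then drops to the baseline, which could explain the high load averages you're seeing. In unlimited mode (the default for T3), it keeps bursting and you're billed for the surplus, which matches your billing concern.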

You’ll probably get more consistent performance by switching to a compute-optimized instance like c6i.large or c6i.xlarge.
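A rough way to read the load averages in this thread is to compare them with the vCPU count. Load average counts runnable tasks, and on Linux it also counts tasks stuck in uninterruptible I/O wait. Both t3.medium and c6i.large have 2 vCPUs. The helper below is a hypothetical name. `os.getloadavg()` is only available on Unix, and the "about 1.0 per vCPU" threshold is a rule of thumb, not a hard limit.

```python
import os


def load_per_vcpu(load_avg: tuple[float, float, float], vcpus: int) -> tuple[float, ...]:
    """Normalise the 1/5/15-minute load averages by the number of vCPUs.

    As a rule of thumb, values persistently above ~1.0 mean more tasks want
    to run (or, on Linux, are waiting on I/O) than there are CPUs to serve them.
    """
    return tuple(x / vcpus for x in load_avg)


if hasattr(os, "getloadavg"):
    # Live reading for the current machine (Unix only).
    print(load_per_vcpu(os.getloadavg(), os.cpu_count() or 1))
```

For the numbers posted here, 11.93 / 2 is about 6.0 per vCPU on the t3.medium. The later 5.51 / 2 on the c6i.large is about 2.8, still above 1 per vCPU.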

> Should I consider tweaking the IOPS on storage

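3k IOPS should be sufficient IMHO, but you can check `iostat` to see if there are any bottlenecks.

> Should I push postgres a bit more

You can check https://pgtune.leopard.in.ua/ and match the recommended configs here.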

> Good places to learn about profiling applications, databases, designs so I could make better choices.

You can also look at https://github.com/benfred/py-spy to profile your Python program, and see if you can play around with the numbers to batch insert the instruments or spawn more threads for the multiprocessing setup, etc. TBH, Go would be better suited for such a task: it can make much fuller use of the underlying CPU cores, gives better control over concurrency, and is quite a lot more resource-efficient than Python.
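The batch-insert idea can be sketched as a small buffer that collects rows and passes them to a flush callback once a size threshold is reached. This is a sketch with hypothetical names (`RowBuffer`, `flush_fn`, `batch_size`). In a psycopg 3 setup, the callback could wrap a single `cursor.executemany(...)` or a `COPY` via `cursor.copy(...)`, neither of which is shown here.

```python
from typing import Any, Callable, Sequence

Row = Sequence[Any]


class RowBuffer:
    """Accumulate rows in memory and flush them in batches.

    `flush_fn` receives a list of rows. In a real pipeline it would perform
    one multi-row insert instead of one INSERT per tick.
    """

    def __init__(self, flush_fn: Callable[[list[Row]], None], batch_size: int = 1000) -> None:
        self._flush_fn = flush_fn
        self._batch_size = batch_size
        self._rows: list[Row] = []

    def add(self, row: Row) -> None:
        self._rows.append(row)
        if len(self._rows) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        # Skip empty flushes so a final flush() on shutdown is always safe.
        if self._rows:
            batch, self._rows = self._rows, []
            self._flush_fn(batch)
```

The batch size trades latency for throughput. Larger batches mean fewer round trips, but more data held in memory and lost if the process dies before a flush.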

I resized it to c6i.large. The current `uptime` output is: 04:43:04 up 1:32, 2 users, load average: 9.43, 9.40, 9.38. I'll read up a bit more on load averages (as I've been diligently doing for the last couple of years) to see if I get it right this time. By the way, does this look okay?

The storage fills up fast too: 9000 instruments for one hour came to ~4 GB. Its IOPS/throughput don't seem to be causing any issues that I can see, so I increased the storage as well.

I'll compare timescaledb-tune and pgtune to see which makes Postgres perform better.

I have considered Go and have started learning it; it'll take some time to port this to Go.

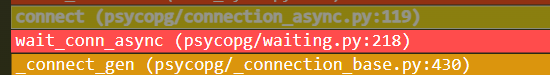

I'm running the profiler right now. I'm still new to flame graphs, but what I found interesting in the trace is that a new DB connection is created every time data is inserted. I'm not sure how much of a gain it would give, but I'll try caching the connection. Currently the code does `async with await pg.AsyncConnection.connect`, which connects asynchronously and, when the block exits, commits and releases resources, especially the connection. Maybe there are better implementations for this.

Thank you again for all your pointers.
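Caching the connection amounts to opening it once per worker and reusing it for every insert, instead of connecting on each write. The sketch below shows only that pattern. `connect` is a hypothetical async factory standing in for something like `psycopg.AsyncConnection.connect(...)`, and `CachedConnection` is an illustrative name. If a pool is preferred, psycopg also ships a separate `psycopg_pool` package with `AsyncConnectionPool`. Keep in mind that every task reusing one connection shares its transaction state, so concurrent writes on it should be serialised.

```python
import asyncio
from typing import Any, Awaitable, Callable


class CachedConnection:
    """Open a connection lazily on first use and reuse it afterwards."""

    def __init__(self, connect: Callable[[], Awaitable[Any]]) -> None:
        self._connect = connect
        self._conn: Any = None
        self._lock = asyncio.Lock()  # stops two tasks racing to open it

    async def get(self) -> Any:
        if self._conn is None:
            async with self._lock:
                # Re-check: another task may have connected while we waited.
                if self._conn is None:
                    self._conn = await self._connect()
        return self._conn
```

Create the `CachedConnection` inside the running event loop (for example, at the start of the worker's main coroutine), then call `await cached.get()` wherever a new connection used to be opened.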

Some updates from today, after a few modifications and removing bloated validation logic.

- load average: 5.51, 6.01, 5.65
- Mem: 3816 1655 112 936 3231 2160
- c6i.large

It's doing much better than before, although the reads are very slow.

To-do:

I haven't been able to get connection caching working with the async pg connection. It produced a large backtrace about all the things I'm not supposed to do, such as sharing transactions within the same thread, and the error logs fill up fast. Maybe each thread should create and own its own connection. So for now, this part is unchanged. I've found that psycopg can prepare statements and offers some other functionality that would make things lighter, so that would definitely help once we get this right.
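If each thread should own its own connection, `threading.local` is one standard-library way to do that: every thread lazily creates its own object and then reuses it. The `make_connection` factory below is a hypothetical stand-in for a synchronous `psycopg.connect(...)`. Reuse also matters for prepared statements. As far as I know, psycopg 3 prepares a query server-side only after it has been executed `prepare_threshold` times (5 by default) on the same connection, so opening a fresh connection per insert never gets that benefit.

```python
import threading
from typing import Any, Callable

# Each thread sees its own independent attributes on this object.
_local = threading.local()


def get_thread_connection(make_connection: Callable[[], Any]) -> Any:
    """Return this thread's connection, creating it on first use."""
    conn = getattr(_local, "conn", None)
    if conn is None:
        conn = make_connection()
        _local.conn = conn
    return conn
```

With multiprocessing, the same idea applies per process: create the connection inside the worker, never in the parent before forking.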

There are a few areas where I use external libraries like pydantic to enforce typing (I wish Python had structs), which now seems like absolute overkill. I'll try getting rid of them and replacing them with something native.

To anyone reading this in the future: the Go version is much, much better. I just finished an initial implementation porting the logic from Python to Go, and it seems to be going okay.

Will try to update this thread if things work out properly.

@vmx I usually subscribe to 8600+ instruments every day, but at the end of the day I get only a 4.5 GB SQLite file (in FULL mode). I then shrink this file by converting the raw ticks into 1-minute OHLC. I have used both AWS and GCP. I'm wondering how you get 4 GB every hour.

@vmx I use instances with Gbps bandwidth, ample RAM, multicore CPUs, and SSD disks for high disk I/O. There doesn't seem to be a bottleneck anywhere, and yet I don't get 4 GB every hour like you mentioned. Am I missing incoming ticks? How?

What are you storing on your end? Let me re-verify; I don't have the Python setup with me anymore. I'm still fixing up a few things, so I'll get back to you when I can.

I'm discarding the best-5 bid and offer, and also all ticks timestamped before the minute in which the data is being streamed. For example, I will not accept a 9:30 am tick at 9:45 am (Kite sends past ticks for thinly traded, illiquid instruments).
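The "current minute only" rule can be expressed by truncating both timestamps to the minute and comparing them. This is a minimal sketch; `minute_floor` and `is_stale` are hypothetical helpers. It assumes the tick's timestamp and the local clock are in the same timezone and roughly in sync.

```python
from datetime import datetime


def minute_floor(ts: datetime) -> datetime:
    """Truncate a timestamp to the start of its minute."""
    return ts.replace(second=0, microsecond=0)


def is_stale(tick_ts: datetime, now: datetime) -> bool:
    """True if the tick belongs to a minute earlier than the one being streamed."""
    return minute_floor(tick_ts) < minute_floor(now)
```

Ticks for which `is_stale(...)` returns True would be dropped before they reach the buffer.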

Hope this helps. You're right, it's about the same. I might not have calculated it properly back then, or perhaps I indexed on things that weren't needed.

- Total rows: 142,134,767
- Size on disk: 780 MB
- Uncompressed size: 5.13 GB
- Filesystem usage: 1.5 GB total
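The figures above work out roughly as follows. This is plain arithmetic on the reported numbers, and it treats MB and GB as decimal units, which is an assumption since the reporting tool's units are not stated.

```python
TOTAL_ROWS = 142_134_767
ON_DISK_BYTES = 780e6        # "Size on disk: 780 MB" (decimal MB assumed)
UNCOMPRESSED_BYTES = 5.13e9  # "Uncompressed size: 5.13 GB" (decimal GB assumed)

compression_ratio = UNCOMPRESSED_BYTES / ON_DISK_BYTES
bytes_per_row_raw = UNCOMPRESSED_BYTES / TOTAL_ROWS
bytes_per_row_disk = ON_DISK_BYTES / TOTAL_ROWS

print(f"compression ~{compression_ratio:.1f}x")
print(f"~{bytes_per_row_raw:.0f} B/row uncompressed, ~{bytes_per_row_disk:.1f} B/row on disk")
```

That is about 6.6x compression, roughly 36 bytes per row uncompressed and about 5.5 bytes per row on disk.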

Also, this is ClickHouse now, not Postgres + TimescaleDB anymore. It works much better: writes and reads are extremely fast, both for queries similar to the ones I ran before and for a few that are even more complicated. No indexes either; it just worked out of the box.

I still do not know where pg was supposed to be tuned.

@vmx the total number of rows in my DB is no more than approximately 70 million per day (rare volatile days can push this to 75 million). That's less than half of your 140 million+ per day.

I don't think there's a database speed issue here, because of how my overall architecture works: Python receives data from the websocket into memory, and once a specific number of rows is reached, it's all flushed into the SQLite DB. The receiver runs in a separate thread and is agnostic of any database operations. So there's no data loss, in the sense that whatever the Zerodha API sends, I receive 100% of it.
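This receiver/writer split can be sketched with a `queue.Queue` between the receiving side and a writer thread that flushes to SQLite every N rows. It is an illustrative sketch, not the poster's code. The table name, columns, `FLUSH_EVERY`, and the `None` shutdown sentinel are assumptions, and the websocket callback is simulated by a loop in the main thread.

```python
import os
import queue
import sqlite3
import tempfile
import threading
from contextlib import closing

FLUSH_EVERY = 500  # hypothetical batch size
_SENTINEL = None   # pushed once to tell the writer to finish


def writer(q: queue.Queue, db_path: str) -> None:
    """Drain the queue and insert rows in batches; owns its own connection."""
    with closing(sqlite3.connect(db_path)) as conn:
        conn.execute("CREATE TABLE IF NOT EXISTS ticks (token INTEGER, price REAL)")
        batch = []
        while True:
            item = q.get()
            if item is _SENTINEL:
                break
            batch.append(item)
            if len(batch) >= FLUSH_EVERY:
                conn.executemany("INSERT INTO ticks VALUES (?, ?)", batch)
                conn.commit()
                batch.clear()
        if batch:  # flush the remainder on shutdown
            conn.executemany("INSERT INTO ticks VALUES (?, ?)", batch)
            conn.commit()


def simulate(n_rows: int) -> int:
    """Feed n_rows fake ticks through the pipeline and return the stored count."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "ticks.db")
        q: queue.Queue = queue.Queue()
        t = threading.Thread(target=writer, args=(q, path))
        t.start()
        for i in range(n_rows):  # stands in for the websocket tick callback
            q.put((i, 100.0 + i))
        q.put(_SENTINEL)
        t.join()
        with closing(sqlite3.connect(path)) as conn:
            return conn.execute("SELECT COUNT(*) FROM ticks").fetchone()[0]
```

The receiving side only does a cheap `q.put(...)`, so a slow disk write delays the writer thread, not the websocket callback.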

On the database side, your stack is way better. But my primary goal isn't realtime streaming. My compressed file size is far smaller because I later convert all those ticks into 1-minute OHLC, which reduces 70 million rows to approximately 1 million.
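Converting raw ticks into 1-minute OHLC bars comes down to grouping by (instrument, minute) and keeping the first, highest, lowest, and last price in each group. This is a minimal pure-Python sketch that assumes ticks arrive as hypothetical `(token, timestamp, price)` tuples already sorted by time. A database `GROUP BY` would do the same job at scale.

```python
from datetime import datetime
from typing import Iterable


def to_ohlc(
    ticks: Iterable[tuple[int, datetime, float]],
) -> dict[tuple[int, datetime], tuple[float, float, float, float]]:
    """Aggregate time-ordered (token, ts, price) ticks into 1-minute OHLC bars.

    Returns {(token, minute_start): (open, high, low, close)}.
    """
    bars: dict[tuple[int, datetime], list[float]] = {}
    for token, ts, price in ticks:
        key = (token, ts.replace(second=0, microsecond=0))
        bar = bars.get(key)
        if bar is None:
            bars[key] = [price, price, price, price]  # open/high/low/close
        else:
            bar[1] = max(bar[1], price)
            bar[2] = min(bar[2], price)
            bar[3] = price  # ticks are time-ordered, so the latest is the close
    return {k: (v[0], v[1], v[2], v[3]) for k, v in bars.items()}
```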

Cool. For me it's just 12.9M ticks; the rest is market depth on the buy and sell sides, which brings it to about 140M records (12.9M × (10 + 1) ≈ 142M). So, strictly speaking, most of those records aren't ticks. From that point of view, I might have less incoming data than you.
